🔍 TruthCheck | Fact Verification for LLM Output

How to use TruthCheck

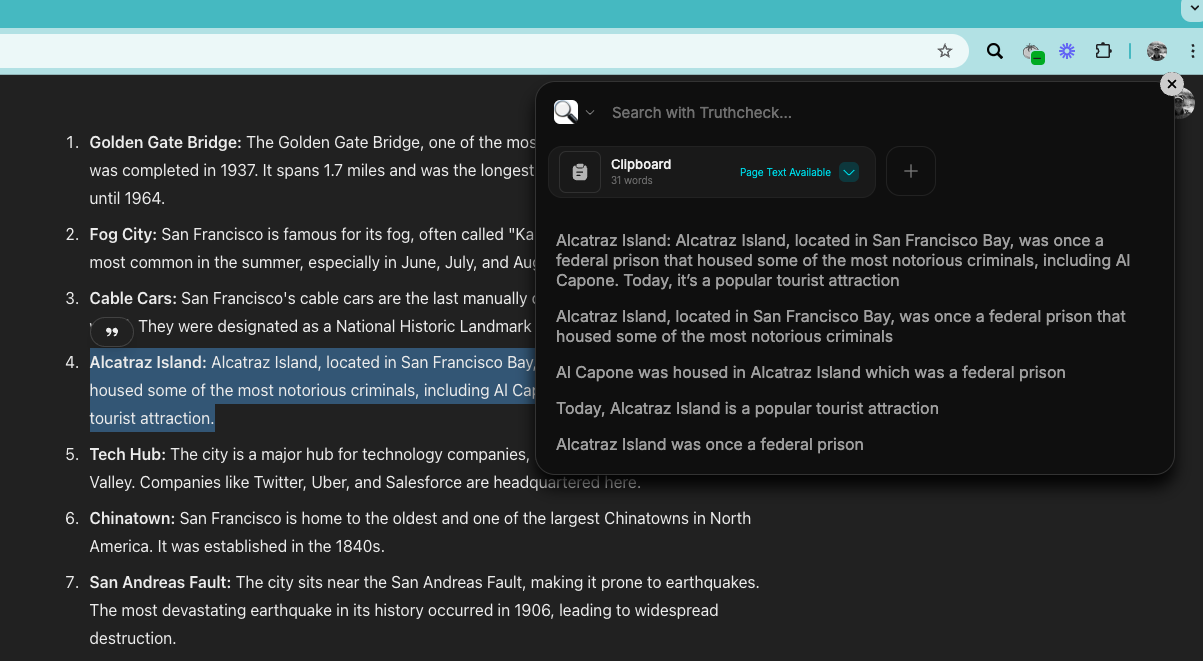

Launch Highlight to verify facts on your screen.

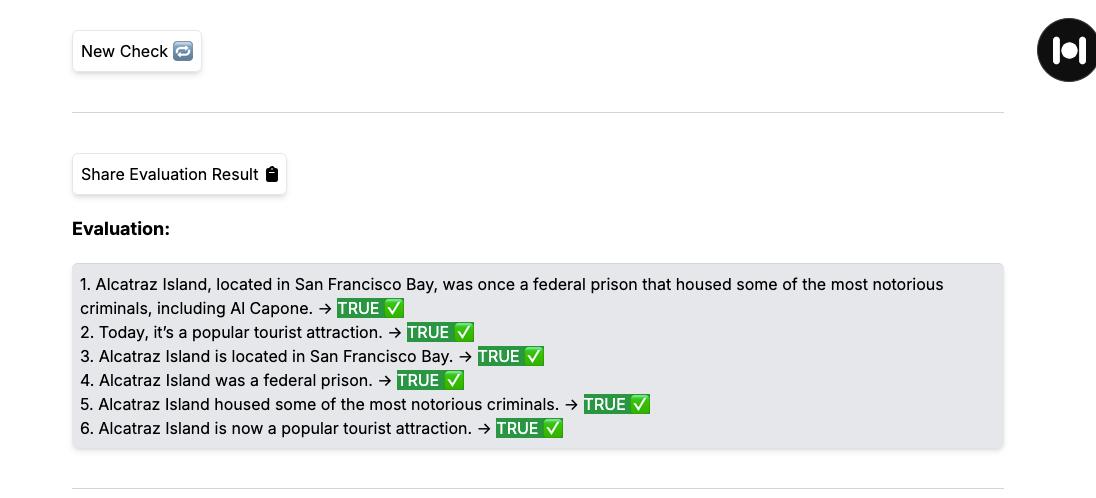

Receive the evaluation result and check the log insights for details.

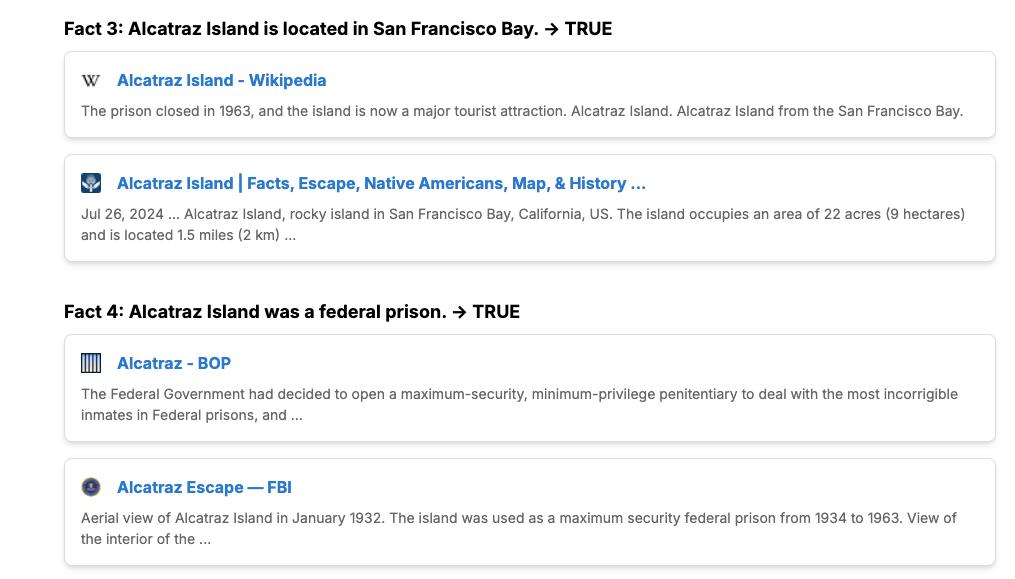

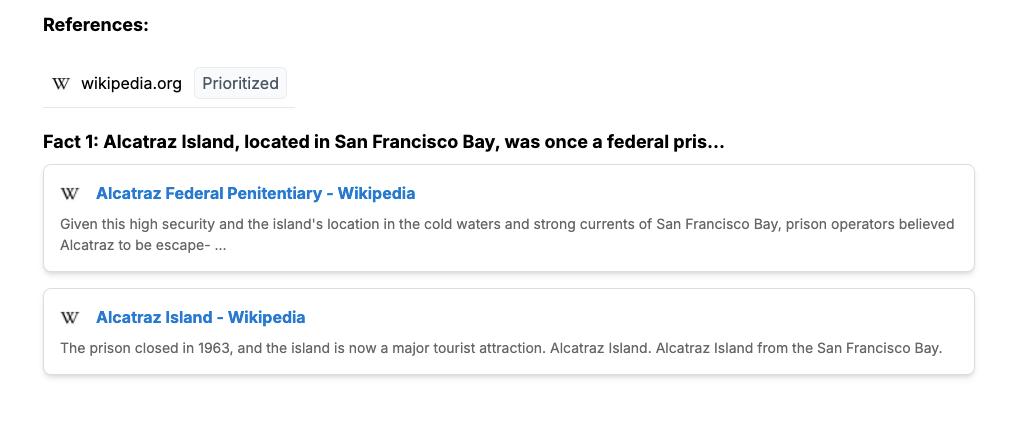

Get verified references.

You can also add custom sources, which will be prioritized.

Related Resources

- Oxford University Research on Hallucinating Generative Models

- High Rates of Fabricated and Inaccurate References in ChatGPT-Generated Medical Content

- GPTZero Perplexity Investigation

- TechCrunch on Generative AI's Hallucination Problem

- The Register Study on LLM Hallucination

- Nature Article on Advances in AI Reliability